summary

As part of an incubator project in collaboration with IBM Research, my team was tasked with making airports more accessible for people who are blind or have low vision. We used design thinking to understand our users' needs and align with our key stakeholders. Our final solution consisted of a voice-first mobile app that worked in conjunction with BLE beacons for accurate indoor location. During the project, we gathered insights from travelers who are blind and used those research findings to turn a touchscreen prototype into a voice-first solution.

problem

We were given an existing research prototype app and were tasked with making it more usable and useful. The app had been used in a controlled study deployment at a shopping mall, but the focus had now shifted to airports. The redesigned app was slated for deployment at multiple airports, with gradual expansion to other venues in the future, including the Tokyo Olympics.

target audience

We created a user persona to represent our target audience. Because of our project's time constraints, we scoped our focus to people who are blind and use a white cane as a mobility aid. We acknowledged that low-vision users and guide dog users would still be served by our solution, but recommended that future iterations of the product focus more deeply on them. This was not an easy decision, but with only six weeks to complete the work, we had to narrow the scope.

We chose to focus on blind users rather than low-vision users partly because designing for people who are blind also covers, to some extent, users on the low-vision spectrum. As for white cane versus guide dog users, the decision was made primarily because far more people use canes than can afford a guide dog. In addition, all of the guide dog users we spoke to also used canes.

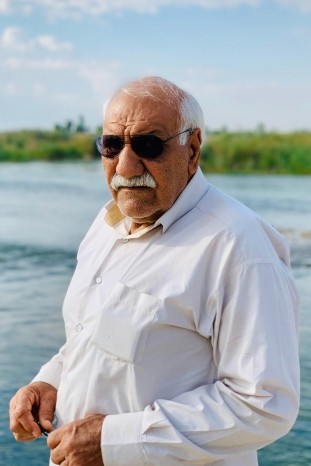

blind traveler gary

Gary is a 58-year-old product manager who has been blind since birth. He frequently travels alone for work and for pleasure. He wants to navigate the airport independently, as well as explore points of interest while he is there. He needs to be able to detect obstacles in front of him, receive live flight updates, and get through security and to his gate on his own.

process

For this project, my team and I used IBM's Enterprise Design Thinking framework. Using it, we observed our target audience, reflected on our findings, and made prototypes to test our ideas. Since this project involved designing an innovative solution to a challenging problem, we placed great emphasis on talking to our users and understanding their needs. As part of our work, we simulated an airport in a research space, which gave us a realistic environment in which to test our prototypes in action.

faking an airport

We knew that user testing would be a challenge because we did not have an airport at our disposal, and we could not use a traditional click-through prototype. To overcome these challenges, we constructed a fake airport in our design studio. We used voice dictation and Wizard of Oz-style instructions to guide our participants on their path to the "gate". Because participants followed the voice instructions, we could create invisible walls that did not physically exist. This let us simulate multiple turns, walls, points of interest, and airport announcements.

voice-first design

For the redesign of NavCog, we focused on a voice-first interface. This let us pack powerful features into an interface that does not rely on visual interactions or cues. The new voice design allowed users to interact with the app naturally and navigate the airport independently.

intuitive navigation

We optimized our navigation instructions for on-foot navigation through large open spaces. Because this use case is so specific, we were able to tailor the navigation experience closely to our users' needs.

adding stops

In addition to getting from point A to point B, we designed functionality that lets people add stops along the way. This helped users explore an airport rather than just transit through it, which is an essential part of being truly independent.

visual interface for low-vision users

We also designed a visual prototype for low-vision users. This was a secondary goal that we had the bandwidth to achieve, though most of our time was spent on voice design.